Introduction

Basketball shooting is fundamentally a data-driven skill. Every shot a player takes can be thought of as a training example in a supervised learning pipeline: the input is the player’s position, stance, and ball control; the target label is the outcome, a made basket or a miss (which also carries feedback). The more high-quality, correctly labelled examples a player gets, the faster the “model” (in this case, the player’s neuromuscular system) can learn the mapping from movement to successful outcome.

Traditional practice without a shooting machine is analogous to a human labelling their own dataset in real time: retrieving rebounds, repositioning, and resetting all take time, adding latency that reduces effective throughput and the number of high-quality examples per session. A basketball shooting machine functions as a high-throughput feeder, providing a consistent, reproducible stream of passes to the player with minimal downtime. This lets players focus purely on model fine-tuning: refining form, release, arc, and decision-making under controlled, repeatable conditions.

For coaches and training programs, these machines act as data pipelines. They allow for multi-user scheduling, individual tracking, and automated feedback, creating an analytics-driven practice environment. Metrics like shot percentage, arc consistency, and reaction times can be measured, logged, and visualised much like evaluating model performance metrics (accuracy, loss, variance) after a training epoch. This transforms subjective coaching observations into objective, measurable data.

Parents and home users benefit in similar ways. A portable unit ensures that young athletes can practice with high data density (more shots per session) without fatigue from manual retrieval. It accelerates skill acquisition while keeping the labels consistent: the right form, trajectory, and spacing for each shot.

The Ultimate Guide to Basketball Shooting Machines (2025)

This long-form guide recasts a practical, buyer-focused article in Natural Language Processing (NLP) metaphors and terminology while keeping the original buyer-help intent intact. Coaches, parents, gym owners, and program managers will find the same actionable checklists, tests, drills, and ROI math, explained through an NLP lens so you can think about machine selection, evaluation, and maintenance like an ML project: define data (shots), choose models (machines), measure metrics (shots/hour, accuracy), run experiments (5-minute throughput test), and maintain deployments (service & firmware).

Why Basketball Shooting Machines Matter: An NLP Framing

In NLP, we talk about training data, throughput, inference latency, and evaluation metrics. In basketball shooting practice, the dataset is the repeated shot; the model is the player’s motor program; and the shooting machine is a data pipeline that supplies labelled examples (catch-and-shoot, pull-up, transition) at high throughput with reproducible conditions.

A good shooting machine is an efficient data feeder; it removes the noisy rebound step (data collection overhead), provides consistent context (spot, trajectory), and lets a coach run controlled experiments (drills). More high-quality labelled repetitions in a session accelerate learning the way more and cleaner training data accelerates an NLP model’s convergence.

Think of your practice as fine-tuning a player-model: the shooting machine provides streaming data, the coach provides loss functions and augmentation (fatigue, defence simulation), and metrics (shot percentage, release time) track progress.

Quick Glossary

- Basketball shooting machine: the data pipeline and feeder for practice examples (shots).

- Shots/hour: throughput (like tokens/sec or samples/hr); distinguish the manufacturer's claim from your own empirical measurement.

- Throughput: same as shots/hour; system capacity under specific conditions.

- Programmable spots: configurable input positions/sampling distribution.

- Analytics/heatmaps: evaluation dashboards (like model diagnostics and confusion matrices).

- The Gun: high-throughput “production inference” systems for facilities (pro-level).

- Dr Dish: feature-rich platforms with a UX, training libraries, and player profiling (like a full ML platform).

- GRIND: a portable “on-device” deployment for coaches on the move.

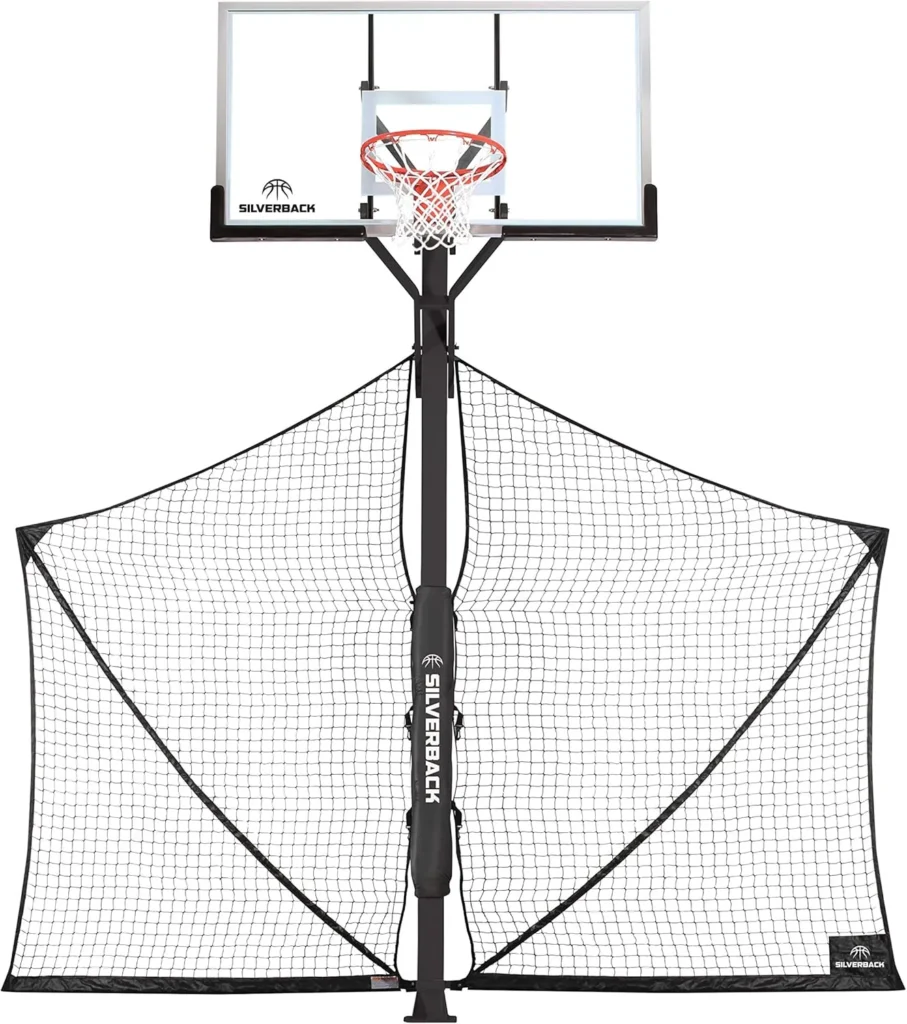

- Return net / rebounder: low-cost samplers; low throughput, limited automation.

Which Type of Basketball Shooting Machine Fits Your Use Case?

Selecting a shooting machine is similar to picking an NLP model or deployment strategy. Determine your workload, expected traffic (practice hours), and desired features (analytics, multi-user support) before choosing.

High-End Programmable Units “On-Prem Enterprise ML”

Best for: high-volume users, schools, training centres, and college programs.

Why: These units are like enterprise ML platforms: robust, multi-user, scalable, with user profiles, workout libraries (pretrained tasks), and advanced analytics. Expect heavy-duty motors (high compute), scheduled maintenance (DevOps), and power needs.

High-Throughput “Gun” Systems “Production Inference Rigs”

Best for: clubs needing maximum sample throughput.

Why: The Gun prioritises raw throughput (shots/hr), wide spot coverage (deployment coverage), and up-time. Think of it as a production inference server designed for intense, continuous use.

Portable Machines “Edge Devices”

Best for: parents, travelling coaches, and small gyms.

Why: Trade throughput and advanced analytics for portability and cost. Like deploying a light-weight model on an edge device: useful, flexible, but with lower capacity.

AI / Vision-Assisted Units “AutoML & Interpretability Tools”

Best for: tech-forward buyers who want guided coaching and form analysis.

Why: These units pair feed mechanics with video analytics, pose estimation, and coaching cues, similar to integrating interpretability and automated diagnostics into an ML pipeline. The technology is new, so validate the promised metrics before purchasing.

Low-Cost Return Nets & DIY “Baseline Samplers”

Best for: total beginners.

Why: Minimal automation, limited throughput, but cheap and simple, useful for bootstrapping practice, but not for scale.

Top Models & Comparative Summary

Manufacturer claims are often benchmarked in ideal lab conditions. Always run an on-court evaluation (protocol below) to validate throughput and durability.

| Model (example) | Best use case (analogue) | Claimed shots/hr (throughput) | Key features (like model attributes) | Typical price (approx.) |
| --- | --- | --- | --- | --- |
| Dr Dish (Home / CT / CT+) | Enterprise-ish training platform | 1,200 (Home) + | Workouts, player profiles, analytics (dashboard) | Home ~$1,995; CT/CT+ ↑ |
| Shoot-A-Way The Gun | Production inference at scale | up to ~1,800 | 200+ spots, heatmaps, large display (ops console) | Dealer quote (pro) |
| GRIND Machine | Edge device (portable) | ~1,000 (claimed) | 12-ft net, lighter, quick setup | ~$1,995 list |
| Hoopfit | AutoML-style coaching | Varies | Vision assist, app workflows | Varies |
| Return nets/rebounders | Baseline sampler | Variable | Low cost, portable | $50–$300 |

Treat this like a model-card snapshot: for each machine, note claimed throughput, latency (time between feeds), memory (ball capacity), and maintenance (service levels).

Overview of Dr. Dish vs. Shoot-A-Way

- Dr Dish — a full-stack product platform with UX, guided content, and player management (think: ML platform with pretrained tasks and user dashboards).

- The Gun — a throughput-optimised inference appliance for heavy production use, designed to keep data flowing for many simultaneous clients.

Analytics & UI

- Dr Dish: touchscreen, guided lesson pipelines, player-level histories (user profiles).

- The Gun: heatmaps and large displays oriented toward immediate diagnostics and competitive metrics.

Throughput & Durability (SLA & hardware)

- The Gun typically advertises higher peak throughput for pro deployments.

- Dr Dish scales: Home model for portability, CT/CT+ for heavier institutional use.

Portability

- Dr Dish Home < Dr Dish CT < The Gun (increasing size/weight).

- GRIND is portable: think of it as a light on-device deployment.

Price & Financing

- Dr Dish Home: commonly seen near $1,995 (promotions vary).

- The Gun pro-unit pricing: quote-based; expect a higher TCO.

How to Choose the Right Basketball Shooting Machine

Framing this like an ML procurement checklist will help you treat the purchase as a project rather than an impulse buy.

Intended Use: Home, Small Group, or Full Team?

Define your workload: hours/week × players/session = sample demand. If you’ll run long sessions or many players per week, choose for throughput and serviceability.
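The workload estimate above can be sketched as a small capacity-planning calculation. This is a minimal sketch: the function names and the `shots_per_player_hour` target are assumptions added for illustration, and the input figures are placeholders rather than recommendations.

```python
def weekly_shot_demand(hours_per_week: float,
                       players_per_session: int,
                       shots_per_player_hour: float) -> float:
    """Shots the program wants to take each week.

    shots_per_player_hour is an assumed training target, not a vendor figure.
    """
    return hours_per_week * players_per_session * shots_per_player_hour


def machine_hours_needed(weekly_demand: float,
                         measured_shots_per_hour: float) -> float:
    """Machine hours per week needed to serve that demand."""
    if measured_shots_per_hour <= 0:
        raise ValueError("measured_shots_per_hour must be positive")
    return weekly_demand / measured_shots_per_hour


# Placeholder inputs: 6 practice hours/week, 4 players, 200 shots per player-hour.
demand = weekly_shot_demand(6, 4, 200)
hours = machine_hours_needed(demand, measured_shots_per_hour=1000)
print(f"Weekly demand: {demand:.0f} shots, machine time: {hours:.1f} h")
```

If the machine hours needed exceed the hours the gym is actually available, that points toward a higher-throughput unit or a second machine.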

Realistic Shots per Hour

Manufacturer throughput figures are like vendor-reported benchmarks: test with your own protocol (see the 5-minute test below) in conditions that replicate your environment. Conditions matter: ball type, indoor temperature, flooring, and how many human operators are assisting.

Programmability & Spots

Programmable spots let you shape the sampling distribution of shots, crucial if you’re building specialised training distributions (e.g., more corner threes for a system that prizes corner shooting).
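To make the "sampling distribution" idea concrete, here is a minimal sketch of a weighted shot plan. The spot names and weights are hypothetical; real machines use their own spot numbering and programming interface, which this code does not model.

```python
import random
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical spots and weights: corners are drawn three times as often.
SPOT_WEIGHTS: Dict[str, float] = {
    "left_corner": 3,
    "left_wing": 1,
    "top_of_key": 1,
    "right_wing": 1,
    "right_corner": 3,
}


def sample_spots(weights: Dict[str, float], n_shots: int,
                 seed: Optional[int] = None) -> List[str]:
    """Draw a shot plan where each spot appears in proportion to its weight."""
    rng = random.Random(seed)  # seeded so a plan can be reproduced
    spots = list(weights)
    return rng.choices(spots, weights=[weights[s] for s in spots], k=n_shots)


plan = sample_spots(SPOT_WEIGHTS, n_shots=90, seed=0)
print(Counter(plan))  # corner spots should dominate the counts
```

A coach could read the resulting plan aloud or enter it into the machine by hand; the point is only that the spot mix is a deliberate choice.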

Analytics & Integrations

If you want to measure progress across players, export data for leaderboards, or integrate video overlays, choose machines with exportable analytics and open APIs (or at least export CSV).
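As an illustration of what a CSV export makes possible, the sketch below builds a simple leaderboard. The column names (`player`, `spot`, `made`) and the rows are invented for the example; a real export will use whatever schema the vendor defines.

```python
import csv
import io
from collections import defaultdict
from typing import Dict

# Made-up sample export; not data from any real machine.
SAMPLE_CSV = """player,spot,made
player_a,left_corner,1
player_a,left_corner,0
player_a,top_of_key,1
player_b,left_corner,1
player_b,top_of_key,0
player_b,top_of_key,0
"""


def shot_percentage_by_player(csv_text: str) -> Dict[str, float]:
    """Return makes / attempts for each player in the export."""
    makes = defaultdict(int)
    attempts = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        attempts[row["player"]] += 1
        makes[row["player"]] += int(row["made"])
    return {player: makes[player] / attempts[player] for player in attempts}


percentages = shot_percentage_by_player(SAMPLE_CSV)
leaderboard = sorted(percentages.items(), key=lambda kv: kv[1], reverse=True)
for player, pct in leaderboard:
    print(f"{player}: {pct:.0%}")
```

Grouping by `spot` instead of `player` would give the raw numbers behind a heatmap.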

Portability & Storage

Consider weight, wheel design, and the number of staff required to move the unit. If your facility rearranges courts daily, portability is crucial.

Service & Warranty

Local technicians and fast spare parts are equivalent to having responsive DevOps: downtime costs training opportunities and morale.

Total Cost of Ownership (TCO)

TCO = purchase price + shipping + installation + subscriptions + spare parts + service visits + staff time. Model this over expected lifetime shots to compute cost/shot.
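The TCO formula above translates directly into code. The sketch below is one way to hold the components together; the class and field names are assumptions, and the dollar amounts are placeholders to be replaced with your own quotes.

```python
from dataclasses import dataclass


@dataclass
class OwnershipCosts:
    """Lifetime cost components, all in the same currency."""
    purchase_price: float
    shipping: float = 0.0
    installation: float = 0.0
    subscriptions: float = 0.0
    spare_parts: float = 0.0
    service_visits: float = 0.0
    staff_time: float = 0.0

    def total(self) -> float:
        """Sum every component of the TCO formula."""
        return (self.purchase_price + self.shipping + self.installation
                + self.subscriptions + self.spare_parts
                + self.service_visits + self.staff_time)


def tco_per_shot(costs: OwnershipCosts, lifetime_shots: int) -> float:
    """Spread the total cost of ownership over expected lifetime shots."""
    if lifetime_shots <= 0:
        raise ValueError("lifetime_shots must be positive")
    return costs.total() / lifetime_shots


# Placeholder figures for illustration only.
costs = OwnershipCosts(purchase_price=3500, shipping=150, subscriptions=500,
                       spare_parts=250, service_visits=1500, staff_time=600)
print(f"TCO: ${costs.total():,.0f}")
print(f"Cost per shot: ${tco_per_shot(costs, 200_000):.4f}")
```

Keeping each component as a named field makes it harder to forget recurring items such as subscriptions when comparing quotes.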

Real-World Performance: Validation Protocols, Durability Tests & ROI

Treat performance testing like evaluating a model before deployment. Have a reproducible protocol, document all variables, and publish your method for transparency.

Simple On-Court Throughput Test

- Environment: Charge or plug in the machine, use a standardised ball type and inflation pressure, and use the same court surface and spot setup every time. Log conditions.

- Drill selection: Select a continuous feed drill (no long pauses). If the machine has counters, use them; otherwise, record video.

- Record for 5 minutes precisely and count every ball delivered to the shooter (or use the machine’s counter).

- Scale: Multiply the 5-minute count × 12 = estimated shots/hour. Report variance across 3 runs to show stability.

- Publish: Include video, the spreadsheet (raw counts), and notes (operator, ball inflation, ambient temperature). This is your evaluation notebook.
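The scaling and variance steps of the protocol can be sketched in a few lines. The three counts below are made-up example values, not measurements of any machine, and the function name is an assumption.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def estimate_shots_per_hour(five_minute_counts: Sequence[int]) -> Dict[str, object]:
    """Scale each 5-minute count to an hourly rate and summarise the runs."""
    hourly = [count * 12 for count in five_minute_counts]  # 12 x 5 min = 1 hour
    return {
        "runs": hourly,
        "mean": mean(hourly),
        # Sample standard deviation needs at least two runs.
        "stdev": stdev(hourly) if len(hourly) > 1 else 0.0,
    }


# Made-up counts from three 5-minute runs.
result = estimate_shots_per_hour([82, 85, 79])
print(result["runs"])
print(f"mean = {result['mean']:.0f} shots/hr, stdev = {result['stdev']:.0f}")
```

A large spread between runs suggests the test conditions were not held constant, or that the feed is unstable, and is worth noting next to the headline figure.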

Durability Testing

- Soak test: Run an 8-hour continuous-use simulation and log jams per 1,000 shots, motor temps, and error codes.

- Failure modes: Track frequency and time-to-fix for each failure. Jams, belt wear, sensor drift, and motor overheating are typical.
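A soak-test log can be reduced to the two numbers named above: jams per 1,000 shots and time-to-fix per failure mode. This is a minimal sketch in which the log format, the failure-mode labels, and all values are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical soak-test log: (failure_mode, minutes_to_fix).
FailureLog = List[Tuple[str, float]]

failures: FailureLog = [
    ("jam", 2.0),
    ("jam", 3.0),
    ("sensor_drift", 10.0),
    ("jam", 4.0),
]
total_shots = 6000  # shots delivered during the test (made-up figure)


def jams_per_thousand(log: FailureLog, shots: int) -> float:
    """Jam count normalised to 1,000 delivered shots."""
    jams = sum(1 for mode, _ in log if mode == "jam")
    return 1000 * jams / shots


def mean_time_to_fix(log: FailureLog) -> Dict[str, float]:
    """Average minutes to fix, grouped by failure mode."""
    by_mode = defaultdict(list)
    for mode, minutes in log:
        by_mode[mode].append(minutes)
    return {mode: mean(times) for mode, times in by_mode.items()}


print(jams_per_thousand(failures, total_shots))
print(mean_time_to_fix(failures))
```

Motor temperatures and error codes could be logged the same way, as extra fields on each entry.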

ROI Example: Cost-per-Shot as a Unit-Economics Metric

Use conservative lifetime shots and realistic service costs.

Example:

- Machine cost: $3,500

- Expected lifetime shots: 200,000

- Purchase cost per shot: $3,500 ÷ 200,000 = $0.0175 per shot.

- Add service: $300/year × 5 yrs = $1,500 → $1,500 ÷ 200,000 = $0.0075 per shot.

- Total cost per shot = $0.0175 + $0.0075 = $0.025 per shot.

Compare to coaching rates, additional facility revenues, or player development outcomes to decide ROI.
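The worked example can be reproduced in code, which also makes it easy to see how sensitive the result is to the lifetime-shots estimate. The 100,000 and 400,000 scenarios are added assumptions for illustration; only the 200,000 case comes from the example above.

```python
def cost_per_shot(machine_cost: float, annual_service: float,
                  years: int, lifetime_shots: int) -> float:
    """Purchase plus service cost, spread over expected lifetime shots."""
    return (machine_cost + annual_service * years) / lifetime_shots


# Figures from the worked example: $3,500 machine, $300/year service for 5 years.
base = cost_per_shot(3500, 300, 5, 200_000)
print(f"Base case: ${base:.4f} per shot")

# Sensitivity check: halve and double the lifetime estimate (assumed scenarios).
for shots in (100_000, 200_000, 400_000):
    print(f"{shots:>7,} shots -> ${cost_per_shot(3500, 300, 5, shots):.4f} per shot")
```

Because cost per shot scales inversely with lifetime shots, an optimistic lifetime estimate flatters the ROI, which is why the example asks for conservative figures.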

Battle-Tested Drills

Each drill is framed like a training batch with purpose (task), setup (dataset & prompt), reps (batch size), and coaching cues (loss reduction/gradient hints).
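If you keep a digital practice plan, the same four-part framing maps naturally onto a small record type. The sketch below is one possible shape, with class and field names chosen for illustration; the two sample drills use the rep counts given in this guide.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Drill:
    """One training 'batch': purpose, setup, reps, and coaching cues."""
    name: str
    purpose: str
    setup: str
    sets: int
    reps_per_set: int
    cues: List[str] = field(default_factory=list)

    @property
    def total_reps(self) -> int:
        return self.sets * self.reps_per_set


pull_up = Drill(
    name="Pull-Up & Reset",
    purpose="Off-dribble shooting under changing state",
    setup="Machine feeds to wing; player dribbles 1-2 times, pulls up",
    sets=8,
    reps_per_set=8,
    cues=["Maintain elbow alignment", "Consistent pre-shot routine"],
)
elevated_arc = Drill(
    name="Elevated Arc Drill",
    purpose="Improve arc and touch",
    setup="Adjust arc setting or reposition the machine",
    sets=1,
    reps_per_set=50,
    cues=["Soft touch", "High follow-through"],
)

session_volume = sum(d.total_reps for d in (pull_up, elevated_arc))
print(f"Planned shots this session: {session_volume}")
```

Summing `total_reps` across a session gives the planned shot volume, which can be checked against the machine's measured throughput.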

Catch-and-Shoot Circuit “Supervised Batch: Rhythm Learning”

- Purpose: Improve quick release and rhythm (optimise release time).

- Setup: 3 stations; machine cycles between spots.

- Reps: 5 minutes per station ≈ 60 shots.

- Coaching cues: Quick feet, focus on a stable release, think of this as minimising variance in output.

Pull-Up & Reset “On-the-fly Inference”

- Purpose: Off-dribble shooting under changing state.

- Setup: Machine feeds to wing; player dribbles 1–2 times, pulls up.

- Reps: 8 sets × 8 = 64.

- Cue: Maintain elbow alignment and consistent pre-shot routine (reduce domain shift).

Spot-To-Spot Game “Adversarial Sampling”

- Purpose: Conditioning + pressure (simulate adversarial conditions).

- Setup: Randomised machine rotations; players compete for makes.

- Cue: Sustain quality under load.

Numbered Spots “Conditional Sampling / Response Task”

- Purpose: Reaction-to-cues and cognitive coupling.

- Setup: Coach calls numbers; machine feeds that spot.

- Progression: Increase call speed to tighten decision latency.

Transition Shots “Low-Latency Inference”

- Purpose: Train late-clock, quick catch-and-shoot.

- Setup: Feeds at angles simulating in-motion catches.

- Cue: Short gather, square up fast.

Elevated Arc Drill “Trajectory Regularisation”

- Purpose: Improve arc and touch (trajectory distribution).

- Setup: Adjust arc setting or reposition the machine.

- Reps: 50 focused shots.

- Cue: Soft touch and high follow-through.

Make-It/Take-It “Precision Objective with Hard Constraints”

- Purpose: Build accuracy under pressure.

- Setup: Make streaks to advance.

- Cue: Calm breathing and focused pre-shot routine.

Multi-Player Leaderboard “Cross-Validation & Leaderboards”

- Purpose: Team motivation and relative performance tracking.

- Setup: Use analytics or a manual scoreboard.

- Cue: Reward consistency across sessions.

Conditioning + Shots “Data Augmentation: Fatigue Injection”

- Purpose: Combine physical load with shooting (domain shift).

- Setup: Add sprints between shots, timed rounds.

- Cue: Control mechanics while fatigued.

Coach-Led Correction Sessions “Iterative Fine-Tuning”

- Purpose: Focus on a single mechanic, then repeat for consolidation.

- Setup: Isolate one variable; use video for before/after comparison.

- Cue: One correction at a time; measure effect over repeated batches.

Maintenance & Care: Model Ops for Hardware

Treat maintenance like model retraining and system patching.

Daily

- Wipe sensors and touchscreens (remove noise).

- Clear loose balls from the feed area (prevent pipeline failure).

- Check for loose bolts (check system integrity).

Weekly

- Inspect belts and tensioners.

- Run a 30–60 minute test and listen for anomalies (anomaly detection).

- Tighten bolts and check fasteners.

Monthly / Quarterly

- Lubricate moving parts per the manual.

- Replace belts early if wear is visible (prevent catastrophic failure).

Annual

- Authorised technician: motor check, firmware update, safety inspection.

Common Fixes

- Motor resets: power cycle and consult error codes.

- Sensor cleaning: soft brush & compressed air.

- Ball jams: power down and follow manual safety steps.

Common Buying Mistakes to Avoid

- Buying only on “shots/hour” claims: benchmark in your own environment first.

- Ignoring subscription fees — library and cloud analytics often cost extra.

- Skipping warranty/service checks — warranty is only as good as local support.

- Buying the biggest machine without a plan — match throughput to real usage.

- Not planning storage/movement costs — staff time and physical constraints matter.

Pricing & Financing

Ask vendors for all cost components: base price, shipping, installation, subscriptions, training, spare parts, and demo availability. Look for:

- Demo units or local trials (A/B test before purchase).

- Refurbished or demo-condition units for cost savings.

- Financing options to spread costs (consider interest vs. cash flow).

Quick Buyer’s Checklist

- Intended users & weekly usage hours (workload estimate).

- Required shots per session and machine throughput (capacity planning).

- Portability & storage space available (deployment constraints).

- Warranty length & local service map (SLA & support).

- Recurring fees for analytics/workouts (subscription model).

- Plan for demos & a 5-minute throughput test.

- Maintenance schedule & spare parts availability.

FAQs

Q: Are basketball shooting machines worth the investment?

A: If your training program uses them regularly (many sessions per week or many players), they are worth it. They multiply reps and improve practice quality. ROI depends on how often you use them and the machine’s lifetime.

Q: Can shooting machines be used outdoors?

A: Most are for indoor use. Some portable units can tolerate a sheltered outdoor space, but avoid rain and extreme weather. Check the machine’s specs for IP ratings.

Q: Which is better, Dr Dish or The Gun?

A: Both are leaders. Dr Dish is great for workout libraries and UX; The Gun is best for pure throughput and pro facility use. Try demos to pick what matches your needs.

Q: How do I verify a machine’s shots/hour claim?

A: Run a 5-minute continuous feed test, count delivered balls, then multiply by 12 to estimate shots/hour. Publish video and record conditions.

Q: Are there ongoing subscription costs?

A: Many machines offer subscription services for cloud analytics or extra workouts. Add these costs to your total budget.

Conclusion

Selecting and using a basketball shooting machine is akin to designing, training, and deploying an NLP model. Every decision from throughput, spot programmability, and portability to analytics integration affects the quality of your training data (shots), the efficiency of your pipeline (practice session), and ultimately the performance metrics (player skill improvement).

For home users, portable machines like Dr Dish Home or GRIND serve as lightweight edge devices: cost-effective, flexible, and sufficient for steady skill improvement. They provide consistent input examples (shots) for a single player or small group, allowing the player “model” to refine its parameters without overinvesting in infrastructure.

For high schools, small clubs, or mid-level academies, mid-tier CT models or used pro units like The Gun can offer enterprise-level throughput and robustness. These machines function as full-fledged training platforms capable of supporting multiple users, analytics, and structured practice routines. Here, throughput and service-level reliability become crucial, much like scaling a production NLP system for multiple concurrent users.

For elite academies and professional centres, investing in pro-level units (CT+, The Gun) with integrated analytics and video capture is analogous to deploying a high-capacity, production-ready model. These setups maximise both throughput and diagnostic feedback, enabling advanced coaching strategies, continuous monitoring, and precise performance measurement over time.

Across all use cases, one principle holds: always validate throughput, test durability, and log measurable performance metrics. Think of your 5-minute shots/hour test, soak tests, and player case studies as reproducible experiments in a research pipeline. The more transparent your methodology, the higher your confidence in selecting the right model (machine).

Final Notes

Finally, the ROI calculation is your “cost per sample” analysis. By factoring purchase price, service costs, and expected lifetime shots, you can objectively compare alternatives and justify investment decisions. Combined with proper maintenance, analytics, and structured drills, the right basketball shooting machine transforms a player’s practice from ad-hoc repetition into a data-driven, high-throughput learning system.